Acquiring forensic images can be one of the most dreaded steps in many analysts’ processes. It’s documentation-heavy, not terribly interesting to watch, and thanks to the ever-increasing size of commercial hard drives, it can take a LONG time. Given the unrealistic portrayals of this process in movies and TV shows, it’s not uncommon for someone unfamiliar with it to ask “is that done yet?”… a few hundred times. This article explains a quick tip that cuts through the math and unit conversions and lets you accurately estimate how long you’ll be watching the proverbial progress bar.

It never hurts to estimate beforehand how long creating the image will take, so those less-informed onlookers can perhaps stay at the office for the first few hours or so. This usually involves converting lots of units: drive size (gigabytes -> bits), transfer speed (megabits per second), and wall clock time (minutes and hours). I won’t lie: I usually mess this calculation up a few times, every time. However, thanks to a creative use of a Google feature, we can skip that error-prone conversion and generate reliable, immediate time estimates for image creation or download operations.

Google has added some very helpful calculator functions to its search page, and recently introduced “instant” search results. Together, these let you enter “5 + 2” into the search box and get an answer right away.

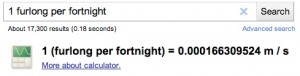

This is not too interesting for simple math, of course. What’s much more useful is that you can specify units and Google will automatically handle and reduce the terms in its result. So the age-old question involving furlongs per fortnight becomes child’s play.

Let’s go back to our forensic image example, though. Common question: How long will it take to create a forensic copy of a 500GB hard drive, using a Firewire 800 connection? Just enter “500 GB / 800 Mbps” and you’ve got your answer. (Note: be sure your capital and lowercase letter “B”s are correct. “B” = Bytes, “b” = bits!)
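If Google’s calculator isn’t at hand, the same conversion is easy to script. The sketch below is a minimal Python version, assuming decimal (SI) units, so 1 GB = 10^9 bytes and 1 Mbps = 10^6 bits per second; `transfer_seconds` and `hms` are hypothetical helper names for illustration. It makes the byte-to-bit factor of 8 explicit, which is exactly where the capital-versus-lowercase “B” matters.

```python
# Decimal (SI) multipliers: an assumption, 1 GB = 10**9 bytes, 1 Mbps = 10**6 bits/s.
SIZE_BYTES = {"B": 1, "KB": 10**3, "MB": 10**6, "GB": 10**9, "TB": 10**12}
RATE_BITS = {"bps": 1, "Kbps": 10**3, "Mbps": 10**6, "Gbps": 10**9}


def transfer_seconds(size: float, size_unit: str, rate: float, rate_unit: str) -> float:
    """Seconds needed to move `size` over a link running at `rate`.

    Capital B in the size unit means bytes; lowercase b in the rate unit means
    bits, so the byte count is multiplied by 8 before dividing.
    """
    bits = size * SIZE_BYTES[size_unit] * 8
    bits_per_second = rate * RATE_BITS[rate_unit]
    return bits / bits_per_second


def hms(seconds: float) -> str:
    """Format a duration in seconds as H:MM:SS."""
    s = round(seconds)
    return f"{s // 3600}:{s % 3600 // 60:02d}:{s % 60:02d}"


secs = transfer_seconds(500, "GB", 800, "Mbps")
print(secs, hms(secs))  # 5000.0 1:23:20
```

At the full rated speed, that is about 1 hour 23 minutes for the 500GB drive. If the capacity were counted in binary units (GiB) instead, the copy would take roughly 7% longer.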

For what it’s worth, I usually estimate transfers at about 80% of their listed speeds, which accounts for protocol overhead and other normal slowdowns. As you become more familiar with the hardware and software you use to create images, you’ll establish your own preferred adjustments.
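The 80% rule of thumb is simply a derating factor applied to the link speed. Here is a minimal sketch, assuming decimal units; `estimate_seconds` and `as_hms` are hypothetical helpers, and the default `efficiency` of 0.8 just mirrors the figure above, so adjust it as you learn your own setup.

```python
def estimate_seconds(size_gb: float, link_mbps: float, efficiency: float = 0.8) -> float:
    """Seconds to copy size_gb decimal gigabytes over a link rated at link_mbps,
    assuming only `efficiency` (0 < efficiency <= 1) of the rated speed is achieved."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = size_gb * 10**9 * 8                        # bytes -> bits
    effective_bps = link_mbps * 10**6 * efficiency    # derated bits per second
    return bits / effective_bps


def as_hms(seconds: float) -> str:
    """Render a duration in seconds as e.g. '1h 44m 10s'."""
    h, rem = divmod(round(seconds), 3600)
    m, s = divmod(rem, 60)
    return f"{h}h {m:02d}m {s:02d}s"


# 500 GB over FireWire 800, at 80% of the rated speed
print(as_hms(estimate_seconds(500, 800)))  # 1h 44m 10s
```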

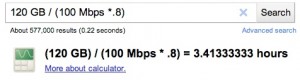

This calculation is also a handy trick when transferring data over the network without a progress meter. Are you using ‘nc’ (netcat) to transfer a dd image of a 120GB drive from a running server, over a 100Mbps network? Better bring extra coffee: it’s going to take a while!

Knowing before the image starts that the transfer will take nearly three and a half hours might lead us to consider a faster networking option or a different solution altogether.
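The same arithmetic also shows how costly a bytes-versus-bits slip can be. This hypothetical sketch, assuming decimal units and the 80% derating used above, compares a link of 100 megabits per second with the same number misread as 100 megabytes per second.

```python
GB = 10**9    # decimal gigabyte, in bytes
MBIT = 10**6  # one megabit, in bits


def seconds_for(size_bytes: float, rate_bps: float, efficiency: float = 0.8) -> float:
    """Seconds to move size_bytes over a link of rate_bps bits/s, derated by efficiency."""
    return size_bytes * 8 / (rate_bps * efficiency)


correct = seconds_for(120 * GB, 100 * MBIT)      # 100 Mbps: megabits per second
misread = seconds_for(120 * GB, 100 * 8 * MBIT)  # 100 MBps: megabytes per second, 8x faster
print(f"{correct / 3600:.2f} h vs {misread / 60:.0f} min")  # 3.33 h vs 25 min
```

One wrong letter turns a job of more than three hours into an apparent 25-minute one.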

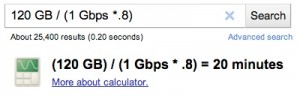

For example, if we switch to a 1Gbps Ethernet port on the server, the same 120GB drive image will take just 20 minutes.

If that’s not a viable option, we might lean toward taking the server down and using Firewire 800 for a bit under a half hour.
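Laying the options side by side takes only a short loop. This is a sketch assuming decimal gigabytes and the same 80% derating; `minutes_by_link` is a hypothetical helper, and the link list is just the three options discussed above.

```python
SIZE_BYTES = 120 * 10**9  # the 120 GB drive, decimal gigabytes
EFFICIENCY = 0.8          # the 80% rule of thumb

LINKS_BPS = {
    "100 Mbps Ethernet": 100 * 10**6,
    "1 Gbps Ethernet": 1000 * 10**6,
    "FireWire 800": 800 * 10**6,
}


def minutes_by_link(size_bytes: int, links: dict[str, int], efficiency: float) -> dict[str, float]:
    """Estimated minutes to copy size_bytes over each link, derated by efficiency."""
    return {name: size_bytes * 8 / (bps * efficiency) / 60 for name, bps in links.items()}


for name, minutes in minutes_by_link(SIZE_BYTES, LINKS_BPS, EFFICIENCY).items():
    print(f"{name:<18} {minutes:5.0f} min")
```

This prints about 200 minutes for 100 Mbps, 20 for 1 Gbps, and 25 for FireWire 800, matching the estimates in the text.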

If you’re onsite and in the middle of a transfer, Google can still help through instant search on your mobile device. Below, we’re 50% through a 60GB transfer using FTP over a 100Mbps network connection. The FTP software reported a sustained throughput of 6.5MBps so far. A simple calculation shows we’ve got a little over an hour and 15 minutes to go.
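When the software reports actual throughput, that measured figure beats any rated speed. Here is a minimal sketch with a hypothetical `remaining_seconds` helper, assuming decimal units and that the observed rate holds for the rest of the transfer; note the capital B, since the FTP client reports megabytes per second.

```python
def remaining_seconds(total_bytes: float, fraction_done: float, observed_bytes_per_sec: float) -> float:
    """Time left, assuming the throughput observed so far continues unchanged."""
    if not 0 <= fraction_done <= 1:
        raise ValueError("fraction_done must be between 0 and 1")
    remaining_bytes = total_bytes * (1 - fraction_done)
    return remaining_bytes / observed_bytes_per_sec


# 60 GB transfer, 50% done, sustained 6.5 MB/s (megabytes per second)
secs = remaining_seconds(60 * 10**9, 0.5, 6.5 * 10**6)
print(round(secs / 60))  # 77
```

About 77 minutes remain, a little over an hour and a quarter.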

As anyone who’s created forensic images knows, Murphy will rear his proverbial head when you least want him to, and that goes double when you’re doing something important. But by using Google’s search calculator to recalculate time estimates as the job progresses, you can keep your colleagues on the ground informed and take a degree of uncertainty out of the equation.

Footnote: A friend also mentioned that the same calculations can be done with the Wolfram|Alpha application on the iPhone. (Hat tip to @theonehiding.) If you know of others that can do transparent unit conversion, please mention them in the comments.

I made a free iPhone app for those who want to monitor ongoing computer processes like I do (http://bit.ly/usthebarlite).

Before “The Bar”, I calculated the remaining time with a simple rule of three; now I just take two pictures of the progress bar…
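The rule of three assumes progress keeps moving at the rate seen between two readings of the progress bar. A small sketch of that extrapolation, with a hypothetical function name and made-up readings:

```python
def eta_seconds(t1: float, pct1: float, t2: float, pct2: float) -> float:
    """Seconds remaining after the second reading, by the rule of three.

    t1, t2: times of two progress-bar readings, in seconds (t2 > t1)
    pct1, pct2: percent complete at those times (pct2 > pct1)
    """
    if t2 <= t1 or pct2 <= pct1:
        raise ValueError("need two readings with increasing time and progress")
    # remaining / (100 - pct2) == (t2 - t1) / (pct2 - pct1)
    return (100 - pct2) * (t2 - t1) / (pct2 - pct1)


# Hypothetical readings: 20% done, then 30% done ten minutes (600 s) later.
print(eta_seconds(0, 20, 600, 30) / 60)  # 70.0
```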

Hi, nice article.

It seems you’re familiar with Unix, so maybe I can ask: what’s the best Unix tool to transfer 300GB of data over 1Gbps fiber? netcat?

I was using cpio over NFS and it takes the whole day, so I’m looking for better alternatives.

Thanks, I hope it’s helpful.

To move that kind of data, go as raw as you can. Every bit of protocol overhead eats into performance, and with 300GB that adds up to many hours. As you found, cpio and NFS are both pretty inefficient for that kind of job.
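For scale, here is a rough best case. Assuming decimal units and disks fast enough on both ends to keep the link busy (an assumption, not a given), 300GB over 1Gbps should take well under an hour, which suggests a day-long copy is limited by the tooling rather than the wire. `transfer_minutes` is a hypothetical helper.

```python
def transfer_minutes(size_gb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Minutes to push size_gb decimal gigabytes through a link of link_gbps,
    with efficiency as the fraction of line rate actually achieved."""
    bits = size_gb * 10**9 * 8
    return bits / (link_gbps * 10**9 * efficiency) / 60


best = transfer_minutes(300, 1)                     # full line rate, no overhead
derated = transfer_minutes(300, 1, efficiency=0.8)  # 80% rule of thumb
print(f"{best:.0f} to {derated:.0f} minutes")       # 40 to 50 minutes
```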

I’d recommend using netcat, with dd on each end. If you’re moving multiple files, tar them up (don’t compress), and pipe that tar directly across the network. (If you compress, the bottleneck becomes your CPUs, not the network, so unless it’s 300GB of text, just skip it.)

Something like this for moving multiple files:

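    [root@source ~]# tar cf - /path/to/source | nc dest_ip 2020
    [root@dest ~]# nc -l 2020 | tar xf -

Or this for a monolithic file:

    [root@source ~]# dd if=source_file bs=1M | nc dest_ip 2020
    [root@dest ~]# nc -l 2020 | dd of=dest_file bs=1M

(I haven’t tested these commands just now; I’m going from memory. Start the listener on the destination before running the sending command. Netcat variants differ: traditional netcat wants `nc -l -p 2020` for the listener, and some versions need an extra option, such as `-q 0` or `-N`, to close the connection when the input ends.)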
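To illustrate why the nc approach is so lean, here is a minimal Python sketch of the same idea. One side listens and writes every byte it receives to a file; the other streams a file into a TCP connection with no framing or per-file metadata. It is an illustration with hypothetical function names, not a replacement for nc, and it does no verification of its own.

```python
import socket

CHUNK = 1 << 20  # read and send in 1 MiB pieces


def receive_to_file(listener: socket.socket, dest_path: str) -> int:
    """Accept one connection and write everything it sends to dest_path.

    Roughly the role of `nc -l PORT | dd of=dest_file` on the destination.
    Returns the number of bytes written.
    """
    conn, _addr = listener.accept()
    total = 0
    with conn, open(dest_path, "wb") as out:
        while True:
            chunk = conn.recv(CHUNK)
            if not chunk:  # empty read: the sender closed the connection
                break
            out.write(chunk)
            total += len(chunk)
    return total


def send_file(src_path: str, host: str, port: int) -> int:
    """Stream src_path to host:port as raw bytes, then close the connection.

    Roughly the role of `dd if=source_file | nc dest_ip PORT` on the source.
    Returns the number of bytes sent.
    """
    total = 0
    with socket.create_connection((host, port)) as sock, open(src_path, "rb") as src:
        while True:
            chunk = src.read(CHUNK)
            if not chunk:  # end of file
                break
            sock.sendall(chunk)
            total += len(chunk)
    return total  # leaving the with-block closed the socket, signalling EOF
```

On the destination you would create a listener with `socket.create_server(("", 2020))` and pass it to `receive_to_file`, then call `send_file` on the source with the destination’s address.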

If you’re unsure of the integrity of the data link, or otherwise need source/destination verification, use dc3dd instead of dd. For the tar example, you’d insert dc3dd into the pipeline between tar and nc.
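dc3dd can hash data while it copies. If you only have plain dd and nc, one fallback is to hash both copies afterwards and compare the results. Here is a minimal sketch using Python’s standard `hashlib`, reading in chunks so a multi-hundred-gigabyte image never has to fit in memory; `chunked_digest` and `copies_match` are hypothetical helper names, and MD5 is only a default (any `hashlib` algorithm name, such as "sha256", works).

```python
import hashlib


def chunked_digest(path: str, algorithm: str = "md5", chunk_size: int = 1 << 20) -> str:
    """Hex digest of a file, read chunk_size bytes at a time."""
    h = hashlib.new(algorithm)
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def copies_match(source_path: str, dest_path: str, algorithm: str = "md5") -> bool:
    """True if the source and destination files hash identically."""
    return chunked_digest(source_path, algorithm) == chunked_digest(dest_path, algorithm)
```

In practice, you would run `chunked_digest` on each machine and compare the two hex strings, rather than copying one image back just to check it.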

Hope that helps somewhat!

I thought a little more about this, and about the options I’ve used in similar situations. If you are moving lots of smaller files, you may want to consider just sucking up the inefficiency and going with rsync (over its own TCP socket in daemon mode if possible, but it also works over SSH/RSH or direct filesystem access). rsync gives you a basic level of checksumming, so a separate md5 verification step becomes unnecessary. It also makes resuming an interrupted transfer much easier, since rsync handles that process very efficiently.

I’m pretty sure that NFS is the bottleneck, in your situation, though.